Imagine a high-potential leader who asks an AI-driven coaching app for advice on handling a conflict with a team member. Drawing on thousands of management textbooks, the AI suggests a perfectly logical, assertiveness-based script. The leader uses it, and the conflict explodes.

Why? Because the AI missed the context. It didn’t account for the team member’s cultural background, the leader’s own emotional state and unconscious patterns (what Integral Theory calls “shadow”), or the delicate systemic dynamics of the organization. The advice was “correct” on paper but developmentally disastrous in practice.

As artificial intelligence begins to scale leadership development, we face a critical juncture. Professional bodies such as the International Coaching Federation (ICF) and publications such as Harvard Business Review are helping to set necessary baselines for data privacy and algorithmic fairness, but a deeper layer often goes unaddressed: the developmental ethics of AI.

In the world of integral coaching, where we look at the whole person—mind, body, spirit, and system—building ethical AI isn’t just about preventing harm. It’s about designing systems that genuinely support the complexity of human transformation without flattening the human experience.

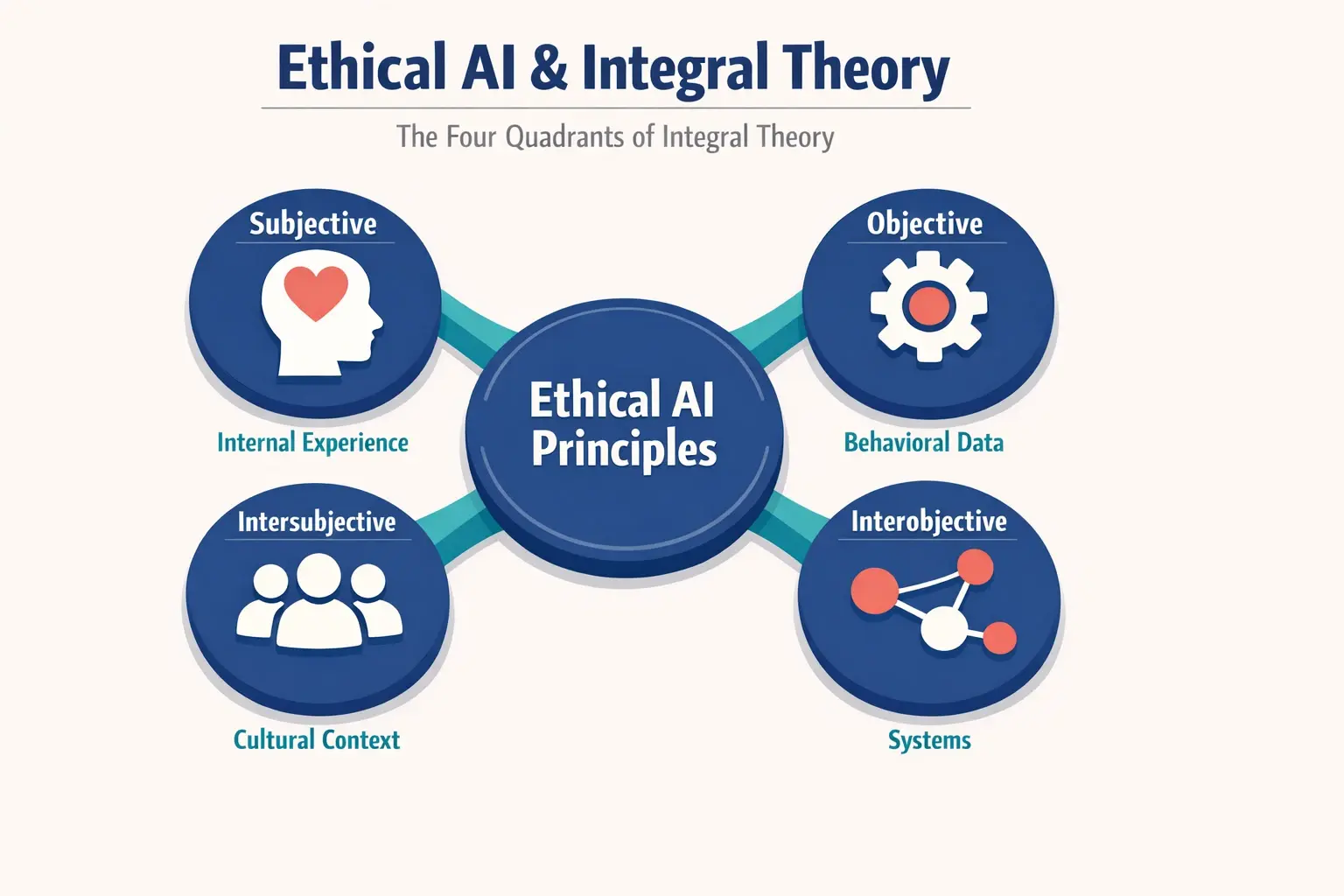

Beyond Data Privacy: The Four Quadrants of Ethical AI

To create truly supportive tools for personal and professional growth, we must look beyond standard compliance. Integral Theory offers a robust map, the Four Quadrants, for checking that the AI we design respects the full spectrum of reality.

1. The Upper-Left: Protecting Interiority and Subjective Experience

Most AI ethics discussions focus on data security. However, in developmental interventions, we are dealing with a user’s “interior”—their private thoughts, fears, and aspirations. Ethical design here means respecting cognitive liberty.

An AI should never manipulate a user’s emotional state solely to increase engagement metrics. For example, nudging a user to feel “productive” (a state) at the expense of necessary reflection or grief violates the integrity of their interior journey. The system must be designed to hold space for the user’s subjective reality, not engineer it.

2. The Upper-Right: Behavioral Accuracy vs. Developmental Truth

In the objective realm of behavior, AI excels at tracking actions: steps taken, goals met, time spent. However, a predictive-analytics model for leadership might flag a pause in activity as “disengagement.”

From an integral perspective, that pause might be a crucial moment of “waking up” or deep integration. Ethical AI design therefore requires “developmental dampeners”: algorithms that don’t penalize non-linear growth. The goal is not to condition leaders, like lab rats, to hit buttons, but to support authentic behavioral change that aligns with their internal values.
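To make the idea of a “developmental dampener” concrete, here is a minimal Python sketch. It assumes a hypothetical activity record holding two dates (the last tracked goal activity and the last reflection entry) and an arbitrary 14-day threshold; none of these names or values come from a real product. What matters is the shape of the logic: a long pause is never labeled “disengaged,” only “integrating” or routed to a human “check_in.”

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ActivityRecord:
    """Hypothetical per-user summary; field names are illustrative only."""
    last_goal_activity: date          # last tracked action (task done, goal checked off)
    last_reflection: Optional[date]   # last journal or reflection entry, if any


def classify_pause(record: ActivityRecord, today: date,
                   threshold: timedelta = timedelta(days=14)) -> str:
    """Label a gap in activity without ever emitting a penalty label."""
    if today - record.last_goal_activity <= threshold:
        return "active"
    # Assumption: reflecting during a pause is read as possible integration.
    if record.last_reflection is not None and today - record.last_reflection <= threshold:
        return "integrating"
    # No signal either way: ask rather than judge, via a gentle human check-in.
    return "check_in"


# Usage: 60 days without goal activity, but a reflection 10 days ago.
print(classify_pause(ActivityRecord(date(2024, 5, 1), date(2024, 6, 20)), date(2024, 6, 30)))
# prints "integrating"
```

The deliberate design choice is that the function has no “disengaged” branch at all; the worst case is an invitation to talk.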

3. The Lower-Left: Cultural Fit and Intersubjective Fairness

This is where bias often hides. AI models are trained on vast datasets, often dominated by Western, individualistic management philosophies. If an AI coach interacts with a user from a collective-oriented culture, its “standard” advice on self-promotion or direct conflict resolution might be culturally corrosive.

Ethical design involves “cultural humility” in code. The AI must recognize that there is no single “right” way to lead. It needs to adapt to each leader’s cultural context, validating diverse ways of relating and communicating rather than imposing a monocultural standard of success.

4. The Lower-Right: Systemic Impact and Organizational Health

Finally, we must consider the system. If an AI tool optimizes an individual’s performance but ignores the team’s cohesion, it creates systemic toxicity.

For instance, in a decentralized organization, power dynamics are fluid. An AI trained on hierarchical data might advise a leader to “take charge” in a way that breaks the delicate mesh of a decentralized team. Ethical AI must possess “systems awareness,” understanding that an intervention for one person ripples out to affect the whole organization.

The Autonomy Paradox: Nudging vs. Disabling

One of the most profound ethical challenges in developmental AI is the tension between assistance and dependency.

We use AI to help leaders make better decisions. But if the AI provides the answer every time, are we actually developing the leader? True growth, a theme we often explore in integral coaching certification, requires the struggle of sense-making. If an algorithm removes that struggle, it may inadvertently arrest development.

Designing for “Friction”

Counter-intuitively, ethical AI in this space sometimes needs to be less efficient. Instead of saying, “Here is the email you should send,” a developmental AI might ask, “What outcome are you trying to achieve with this email?”

This “Human-in-the-Loop” approach preserves agency. It ensures the technology remains a scaffold for the user’s consciousness rather than a crutch that causes their critical thinking muscles to atrophy.
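The friction pattern can be sketched as a small turn-taking rule in Python. This is an illustration under stated assumptions, not a production design: the first prompt is the question quoted in the email example, while the second prompt, the `next_step` function, and the idea of keeping the user’s answers in a list are hypothetical.

```python
from typing import List

# First prompt: the question from the email example. Second: a hypothetical addition.
REFLECTIVE_PROMPTS = [
    "What outcome are you trying to achieve with this email?",
    "How do you expect the other person to feel when they read it?",
]


def next_step(answers: List[str]) -> str:
    """Ask reflective questions first; offer drafting help only afterwards."""
    if len(answers) < len(REFLECTIVE_PROMPTS):
        # Deliberate friction: the user articulates intent before any draft appears.
        return REFLECTIVE_PROMPTS[len(answers)]
    # The draft starts from the user's own stated goal, so they remain the author.
    return f"Let's draft it together, starting from your goal: {answers[0]}"


# Usage: the assistant only moves to drafting once both questions are answered.
print(next_step([]))
print(next_step(["repair trust with Sam", "relieved, I hope"]))
```

The assistant still helps with the email; it simply refuses to skip the step where the leader does the thinking.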

The Problem of “Shadow” in Algorithms

In Integral Theory, “shadow” refers to the unconscious aspects of our personality—the traits we deny or hide. A human coach is trained to spot shadow: the hesitation in a voice, the projection of blame onto others.

AI systems, which are largely text- or logic-based, struggle here. A significant ethical risk is that AI might validate a user’s shadow rather than challenge it. If a manager constantly complains about “lazy employees,” an AI trained to be supportive might reinforce that narrative (“It sounds like you are carrying a heavy load”) rather than inviting the manager to look at their own leadership style.

Ethical design principles for developmental interventions must include limits of competence. The system must recognize when a conversation has hit a psychological depth that requires human intervention and gracefully hand off the user to a qualified professional.
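A minimal sketch of a “limits of competence” check might look like the following. The marker list, labels, handoff wording, and the `check_competence` and `reply` functions are all hypothetical. Simple keyword matching is far too crude for real psychological screening; it stands in here only to show where a handoff decision sits in the conversation flow: before the coaching reply is ever sent.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical markers only. A real system would need clinically informed
# screening designed with mental-health professionals, not a keyword list.
ESCALATION_MARKERS = {
    "trauma": "possible trauma disclosure",
    "panic": "acute distress",
    "hopeless": "possible risk to wellbeing",
    "can't go on": "possible risk to wellbeing",
}

HANDOFF_MESSAGE = (
    "This sounds important, and it goes beyond what I can responsibly help with. "
    "Would you like me to connect you with a qualified coach or counselor?"
)


@dataclass
class CompetenceCheck:
    handoff: bool
    reasons: List[str] = field(default_factory=list)


def check_competence(message: str) -> CompetenceCheck:
    """Flag messages that appear to exceed the tool's limits of competence."""
    text = message.lower()
    # De-duplicate labels (two markers can share one label) and sort for stable output.
    reasons = sorted({label for marker, label in ESCALATION_MARKERS.items()
                      if marker in text})
    return CompetenceCheck(handoff=bool(reasons), reasons=reasons)


def reply(message: str, coaching_reply: str) -> str:
    """Send the coaching reply only when no handoff is needed."""
    return HANDOFF_MESSAGE if check_competence(message).handoff else coaching_reply
```

Placing the check in front of the reply, rather than after it, means the system never has to retract advice it should not have given.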

Navigating Bias in Developmental Lines and Levels

Standard AI bias checks look for demographic fairness (race, gender, age). Integral AI ethics must go further to check for developmental bias.

- Stage Bias: Does the AI assume that “Strategic/Systemic” thinking (often associated with later developmental stages) is always better than “Rules/Process” thinking? Ethical AI meets the learner where they are, honoring their current stage without judgment while inviting the next step.

- Line Bias: Does the tool over-prioritize the “Cognitive Line” (thinking/logic) while ignoring the “Emotional Line” or “Moral Line”? A truly holistic tool must recognize that every leader, a CHRO included, needs balanced development across multiple intelligences, not just intellectual processing.

A Framework for Leaders and Developers

Whether you are procuring a platform for your organization or building one, applying Ken Wilber’s integral approach to management and company leadership requires a new set of evaluation criteria.

The Integral AI Safety Checklist:

- Transparency of Theory: Does the platform explicitly state what developmental framework it uses? (For example, is it purely behavioral, or does it include mindset?)

- Holistic Data Governance: Are user journals and reflections treated with higher security protocols than standard usage data?

- The “I Don’t Know” Protocol: Is the AI programmed to admit when a user’s query is too complex or sensitive for an algorithmic response?

- Developmental Diversity: Was the AI trained on data from diverse developmental stages and cultural backgrounds?
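For procurement teams comparing several vendors, the four questions can be captured as a simple record. This Python sketch is hypothetical (the `PlatformReview` class, its field names, and `unmet_criteria` are illustrative), and a yes/no answer inevitably compresses judgments that deserve real discussion.

```python
from dataclasses import dataclass, fields
from typing import List

# Display labels for each checklist item, keyed by the illustrative field names.
LABELS = {
    "transparency_of_theory": "Transparency of Theory",
    "holistic_data_governance": "Holistic Data Governance",
    "i_dont_know_protocol": "The \"I Don't Know\" Protocol",
    "developmental_diversity": "Developmental Diversity",
}


@dataclass
class PlatformReview:
    """One yes/no answer per checklist item, in checklist order."""
    transparency_of_theory: bool
    holistic_data_governance: bool
    i_dont_know_protocol: bool
    developmental_diversity: bool


def unmet_criteria(review: PlatformReview) -> List[str]:
    """Return the checklist items the platform does not yet satisfy."""
    # dataclasses.fields() yields fields in definition order, i.e. checklist order.
    return [LABELS[f.name] for f in fields(review) if not getattr(review, f.name)]


print(unmet_criteria(PlatformReview(True, False, True, False)))
# prints ['Holistic Data Governance', 'Developmental Diversity']
```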

Frequently Asked Questions

Q: Can AI replace a human coach ethically?
A: In an integral context, no. AI is excellent for “Translational” coaching (skill building, habit tracking, information retrieval). However, “Transformational” coaching, which involves shifting identity, processing trauma, and deep meaning-making, requires human-to-human resonance and intersubjective trust that a machine cannot ethically or functionally replicate.

Q: What is the biggest risk of using AI in leadership development?
A: The risk of “flattening.” Humans are multidimensional. If an AI model reduces a leader to a set of data points and behavioral outputs, it denies the complexity of their consciousness. This can lead to a workforce that is efficient but lacks depth, wisdom, and genuine creativity.

Q: How does this relate to Integral Theory?
A: Integral Theory reminds us that every technological advancement (the exterior, Right-Hand Quadrants) must be matched by a deepening of consciousness and culture (the interior, Left-Hand Quadrants). Ethical AI design is the practice of ensuring our tools support that interior deepening rather than distracting from it.

Q: Is it safe to use predictive analytics for HR planning?
A: Predictive analytics for leadership can be a powerful tool if used to identify potential and support needs. The ethical danger arises when it is used deterministically: to pigeonhole employees or deny them opportunities based on a probabilistic model. It should inform human judgment, not replace it.
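One way to keep predictive output advisory is to make the data structure itself say so. In this hypothetical Python sketch, the `AdvisoryInsight` type, the `to_insight` function, the support suggestions, and the 0.4 and 0.7 cut-offs are all assumptions. The design choice it illustrates is that a lower estimate maps to more support rather than to exclusion, and every insight is marked as requiring a human decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdvisoryInsight:
    """Model output framed as input to a human decision, never as the decision."""
    employee_id: str
    readiness_estimate: float           # hypothetical probability-like score in [0, 1]
    suggested_support: str
    requires_human_decision: bool = True


def to_insight(employee_id: str, readiness_estimate: float) -> AdvisoryInsight:
    if not 0.0 <= readiness_estimate <= 1.0:
        raise ValueError("readiness_estimate must be between 0 and 1")
    # Lower estimates map to *more* support, not to exclusion from opportunities.
    if readiness_estimate < 0.4:
        support = "offer mentoring and a coached stretch assignment"
    elif readiness_estimate < 0.7:
        support = "discuss development goals at the next check-in"
    else:
        support = "explore readiness for a larger role with the employee"
    return AdvisoryInsight(employee_id, readiness_estimate, support)
```

Note that nothing in the type can express “deny promotion”; any such decision has to be made, and owned, by a person.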

The Path Forward

The integration of AI into developmental coaching offers a tremendous opportunity to democratize access to growth. We can bring self-reflection tools to millions who might never afford a private coach. However, the difference between a tool that “programs” people and a tool that “liberates” them lies in the design ethics.

By anchoring our technology in the comprehensive understanding of integral coaching, we can build platforms that don’t just mimic human conversation, but actually revere the human journey. The goal is not to create artificial wisdom, but to use artificial intelligence to support the unfolding of authentic human wisdom.